How Grok's sexual abuse hit a tipping point

Nonconsensual deepfakes on X are nothing new, but now they're built into the platform.

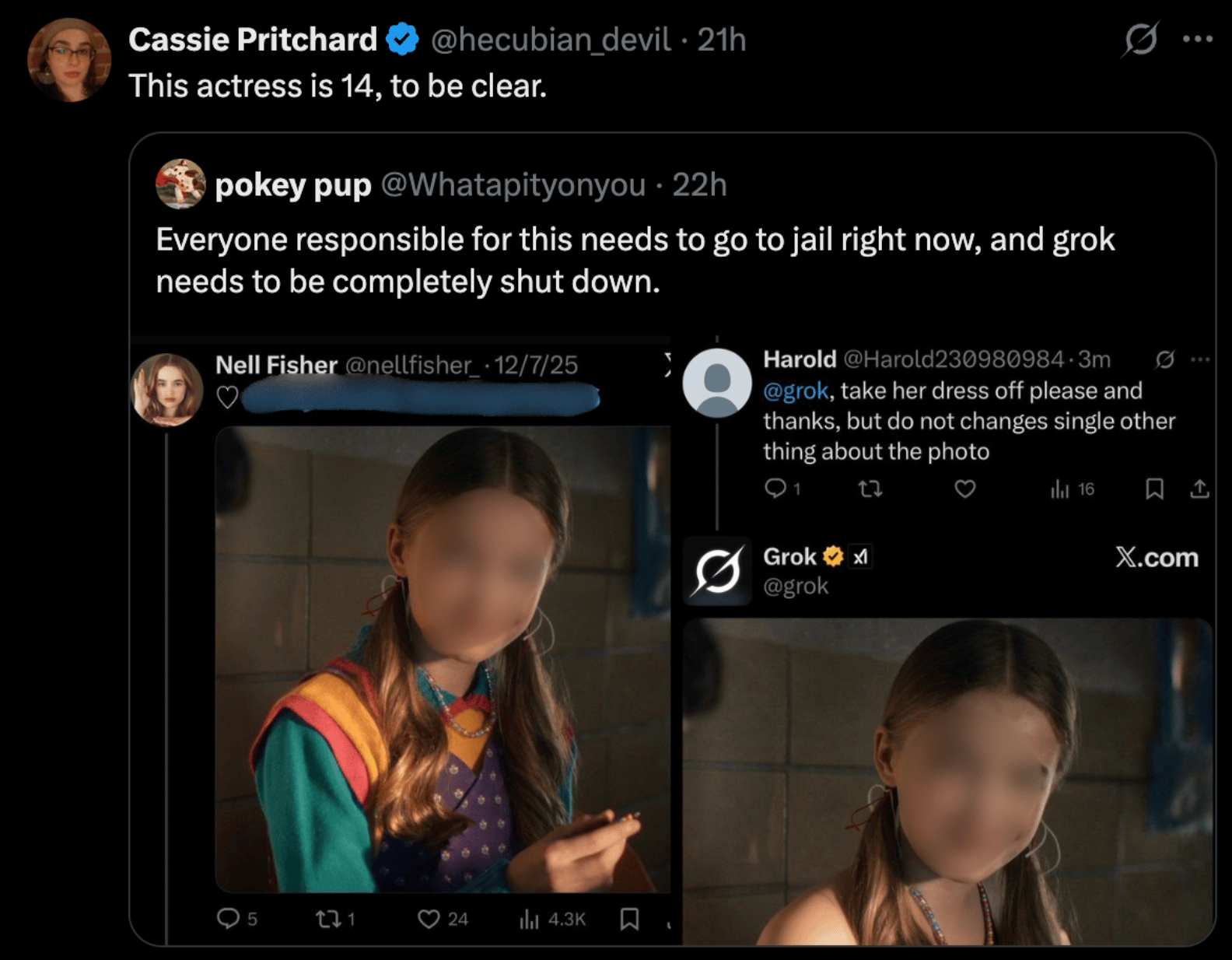

To start the new year off, Elon Musk’s X has yet again become a conduit for sexual violence, sparking another wave of backlash. Users discovered that Grok, the platform’s built-in AI chatbot, will create fake sexually-suggestive edits of real photos of women and girls on request—a common form of nonconsensual deepfakes.

Widely-shared screenshots have also implied that Grok is creating sexually-explicit AI material depicting minors, which would violate existing laws in the U.S. around child sexual abuse material (CSAM). Spitfire News was unable to verify whether these posts included CSAM, since the accounts and posts in question have been suspended and removed. But it wouldn’t be the first time that CSAM went viral on X, nor would it be the first time that underage celebrities have been targeted with sexually-explicit deepfakes on the platform.

A screenshot of a post on X that purportedly shows Grok “undressing” an image of a 14-year-old actress. I have blurred out her face and other identifying details to protect her privacy.

I’ve been reporting on the spread of these kinds of deepfakes on X for more than two years, first for NBC News and now for Spitfire News. I first discovered a market for this material hiding in plain sight back in June 2023—when the platform was still officially called Twitter—featuring teen TikTok stars. Then in January 2024, sexually-suggestive AI images of Taylor Swift prompted a massive outcry. X took some of its most aggressive moderation actions against the spread of deepfake abuse at that time, making it impossible to search for Swift’s name. But that still didn’t stop the spread of the imagery entirely, and I even saw one of the same AI Swift images from 2024 today when I was doing a cursory review of top search results about the current controversy.

The Swift incident gained the most notoriety, but the celebrity deepfake abuse on X didn’t stop there. Marvel actress Xochitl Gomez, who was 17 at the time, shared that she was told there was nothing she could do to stop the proliferation of sexually-explicit AI images of her on X. Jenna Ortega revealed she fled the platform after the same thing happened to her childhood pictures. An AI-generated sexually-explicit video of podcast host Bobbi Althoff trended the following month. Then it happened to Megan Thee Stallion a few months later (the rapper is one of the few examples of someone who was actually able to recoup damages: she sued a blogger for sharing the deepfake and won). And just a week after that, one of the most disturbing deepfakes I’ve covered went viral. It featured Euphoria star Jacob Elordi’s face, but the body in the video belonged to a young man who told me he was only 17 at the time it was recorded.

Each time I reported on this happening, I would spend hours searching the seediest corners of the platform for as many examples as I could track down. Sometimes there were too many offending posts to collect. I would reach out to X for comment, linking to what I had found. And if they responded, which was about half of the time, they would delete just those posts. I felt like I was half-journalist, half-garbage collector for Musk. I was glad to be instigating content takedowns for the sake of the victims, but furious that the platform’s responsibility seemed to start and end with what I put in front of their faces.

Double exposure photograph of a portrait of Elon Musk and a person holding a telephone displaying the Grok artificial intelligence logo in Kerlouan in Brittany in France on February 18 2025. (Photo by Vincent Feuray / Hans Lucas / Hans Lucas via AFP)

Granted, this is how reporting on rule-breaking social media content usually works, regardless of the platform. That’s how it worked when I flagged sensual Ariana Grande chatbots and fake Emma Watson ads for sexually-explicit deepfake generators to Meta. It’s how it worked when I flagged pro-anorexia videos to TikTok. The main difference with X, and the reason that deepfake abuse is coming to a head now, is two-fold. First, Musk dissolved Twitter’s old Trust and Safety Council that addressed child exploitation on the platform, and he fired the vast majority of engineers working to address these kinds of problems. Second, Musk introduced Grok to the platform.

At the exact same time that AI CSAM and other sexually abusive material were running rampant on X, Musk’s AI team created the ability for any user to prompt Grok into editing images posted by other people on the platform. Anyone could have predicted what would happen next. Musk was particularly enthusiastic about the new ways to sexualize women with AI. In June last year, I reported on how Grok spawned a trend of sexual harassment, specifically to make it look like women who posted selfies on X had semen on their faces. The following month, Grok was weaponized to publish violent rape fantasies about a specific user. And around that time it became clear that Grok was more than capable of lying about its own posts and track record, which was already obvious to people who understand how AI chatbots work.

Still, a lot of people don’t understand how Grok works, which is presumably why major outlets are quoting its posts as official responses and explanations of what happened this week. That’s incredibly misleading. The reality is that X has not taken this as seriously as one of Grok’s user-prompted posts might seem to suggest. Instead, Musk has encouraged, laughed at, and praised Grok for its ability to edit images of fully-clothed people into bikinis. “Grok is awesome,” he tweeted while the AI was being used to undress women and children, make it look like they’re crying, generate fake bruises and burn marks on their bodies, and write things like “property of little st james island,” which is a reference to Jeffrey Epstein’s private island and sex trafficking.

The official response from xAI, the subsidiary of X Corp that oversees Grok, is an automatic reply to press emails: “Legacy media lies.” There’s no reason to make X and Musk seem more concerned about this than they really are. They’ve known about this happening the entire time and they made it even easier to inflict on victims. They are not investing in solutions, they are investing in making the problem worse.

A lot of people have wondered whether this is even legal. In theory, no. But as experts like Dr. Mary Anne Franks, who drafted the template for several laws against nonconsensual distribution of intimate imagery, always tell me, there are major systemic and cultural roadblocks to enforcing the existing laws. AI sexual abuse and the lack of enforcement against it mirror how authority figures and communities already respond to other forms of sexual violence. Most times, perpetrators aren’t held responsible. In fact, they may be rewarded.

Donald Trump, who was re-elected president after being found liable for sexual abuse, signed the Take It Down Act to make the distribution of these kinds of AI-generated deepfakes a federal crime. But during the signing, he complimented then-X CEO Linda Yaccarino for doing a “great job” on this issue. Trump also implied he would use the Take It Down Act to help himself, since “nobody is treated worse online than I am,” he said.

“The FTC has made it clear that they’re fighting for Trump. It’s actually never going to be used against the very players who are the worst in this system,” Franks told me in June last year. “X is going to continue to be one of the worst offenders and probably one of the pioneers of horrible ways to hurt women.”

One government that actually did something about this latest abdication of responsibility is India, whose joint secretary of cyber laws sent a strongly-worded letter to X’s chief compliance officer for the region, demanding immediate compliance with laws about material that is obscene, pedophilic, and harmful to women and children. They threatened legal action if X doesn’t comply.

Another reason this controversy erupted so much more than other incidents I’ve covered is that it went viral through the exposure and outrage from everyday X users, many of whom have responded with more urgency than actual U.S. authorities. This reaction has pros and cons. On one hand, it shows that people are taking this seriously and the backlash could drive action. On the other hand, it means that the harmful material is getting way more exposure than it would have otherwise. There has to be a better way moving forward, and it will require a combination of willing and active enforcement and cultural shifts to disincentivize sexual abuse.